Investigating IPEDS Distance Education Data Reporting: Progress Has Been Made

Published by: WCET | 4/18/2016

Tags: Distance Education, Enrollment, IPEDS, Research, U.S. Department Of Education

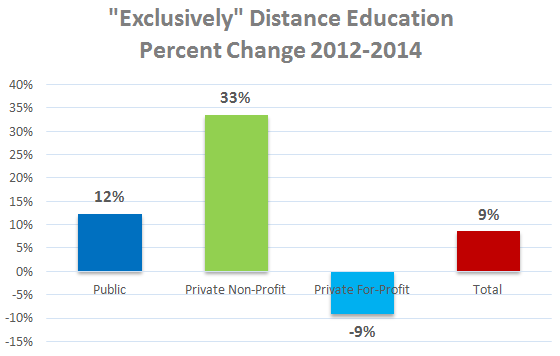

WCET has analyzed the Department of Education’s IPEDS (Integrated Postsecondary Education Data System) data since the initial release of distance education data for Fall 2012. Most recently, we produced a comprehensive report, WCET Distance Education Enrollment Report 2016, that analyzes trends in the distance education data reported between 2012 and 2014.

In the past, we’ve alerted you to problems that some institutions had in reporting distance education enrollments to IPEDS. We’re glad to report that many have addressed those problems. A few institutions still maintain their own practices or remain a complete mystery. Here’s what we found in revisiting the same problem children from the original IPEDS distance education enrollment reports.

Examining Possible Distance Ed Undercounts Since 2012 – Many Students Missing

In September 2014, we wrote a blog post that explored discrepancies in distance education enrollments reported in the Fall 2012 IPEDS data. After being tipped off by some WCET members about problems they had in submitting data, we reviewed enrollment reports and identified 21 institutions with enrollment responses that seemed unusually low. We contacted representatives of those institutions to ask whether the colleges reported all of their for-credit distance education enrollments for Fall 2012. If they did not, we asked about the size of the undercount and the reasons why enrollments were not reported.

The reasons for the undercounts fell into two main categories.

As a result, we learned that the IPEDS numbers undercounted both distance education and overall higher education enrollments by tens, if not hundreds, of thousands of students.

Has Reporting Improved Over the Last Three Years?

With three years of IPEDS data now available for distance education, it seemed like a good time to revisit the challenges of early distance education data reporting. We wanted to see if the challenges of accurately reporting distance education enrollments for the colleges we investigated in 2014 persisted.

Improved Their Reporting

There has been improvement for some institutions. A large public institution in the southwest that offers multiple start dates had reported that it was not possible to accurately report fall enrollment to IPEDS with its data management systems at the time. It reported over a 70% increase in “Exclusively” Distance Education (DE) enrollments between Fall 2012 and Fall 2014, while overall enrollment on the campus grew by 20%. This suggests that the staff responsible for IPEDS reporting at the school have found a way to collect and report data for the multiple start dates each fall.

A state university in the south that reported no “Exclusively” DE enrollments in 2012 reported 758 enrollments in Fall 2014, while total enrollments at the institution increased 2%. When asked about the lack of reporting in 2012, a representative indicated that they did not have the systems in place to accurately report DE enrollments, but that they were reporting accurate DE data beginning in 2013. The recent data indicates that they have put a system in place to report distance education enrollments.

A public system in the west reported a 25% increase in “Exclusively” DE enrollments while reporting a 3% decline in overall enrollments between Fall 2012 and Fall 2014.

While we refrained from naming most institutions in the 2014 blog post, we did name the California State University system, because its admitted issues with the IPEDS definition of “distance education” triggered our interest in digging deeper into the data to understand the anomalies in reporting. In 2014, Cal State University system representatives freely admitted that they were counting only “state support enrollments,” not the 50,000 students who were taking for-credit courses offered by their self-support, continuing education units.

Analysis of the changes in the Cal State system’s IPEDS reporting suggests that they have probably aligned their reporting with the Department of Education requirements. Total enrollments increased 2% between Fall 2012 and Fall 2014, while the “Exclusively” DE enrollments reported increased 60% in the same period.

A similar trend is evident when reviewing another multi-campus state university system in the west that is known to have invested heavily in national advertising in this timeframe. This institution reported a 13% increase in total enrollments and an 85% increase in “Exclusively” DE enrollments between Fall 2012 and Fall 2014. It is not clear how much of the online enrollment growth reflects true increases and how much is attributable to changes in reporting.

Reporting Has Not Changed

Another large institution in the southwest told us in 2014 that they used their own definition of distance education, not the IPEDS definition. A follow-up with the contact revealed that they still use their own definition of distance education to report DE data to IPEDS. This institution reported a 22% increase in overall enrollments and a 35% increase in “Exclusively” DE enrollments between Fall 2012 and Fall 2014. Because their reporting method did not change, this growth reflects increased enrollments rather than a change in reporting. The contact also warned that the DE enrollments are a small proportion of their total enrollments, so the percentage change can be misleading.
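The contact's caution about small bases can be illustrated with a little arithmetic. The sketch below uses entirely hypothetical headcounts (not IPEDS figures for any institution), chosen only so that the percentages match the 22% and 35% cited above; the function name `pct_change` is ours.

```python
def pct_change(old, new):
    """Percentage change from an old value to a new value."""
    return (new - old) / old * 100

# HYPOTHETICAL headcounts for illustration only; not actual IPEDS data.
total_2012, total_2014 = 50_000, 61_000   # overall enrollment
de_2012, de_2014 = 400, 540               # "Exclusively" DE enrollment

total_growth = round(pct_change(total_2012, total_2014))  # 22
de_growth = round(pct_change(de_2012, de_2014))           # 35

# The larger percentage comes from far fewer additional students.
added_total = total_2014 - total_2012  # 11,000 students
added_de = de_2014 - de_2012           # 140 students

print(f"Total: +{total_growth}% ({added_total} students)")
print(f"Exclusively DE: +{de_growth}% ({added_de} students)")
```

With numbers like these, a 35% jump in DE enrollments would represent only 140 additional students, which is why the percentage alone can overstate the change.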

It’s a Mystery

A large private institution in the west strongly declined to talk to us in 2014. That institution reported a loss in total enrollment at their main campus of 11% between Fall 2012 and Fall 2014. Even though they have extensive distance education offerings, they continued to report almost no distance education enrollments. Meanwhile, a sister campus of that institution reported a 57% increase in total enrollment and a 227% increase in DE enrollment in the same period. Could they be reporting all of the main campus distance education enrollments through the sister campus? It’s possible, but that would be odd. The sister campus advertises extensive offerings of its own in academic programs that differ from the main campus. The reporting at this institution remains a mystery.

Reporting is Improving, but the 2012 Data is Still a Shaky Base

The IPEDS distance education data allows us to compare institutions using a consistent set of expectations provided by the IPEDS survey. Observing institutions’ IPEDS data reporting since 2012 suggests that they are gaining the experience and improving their systems and reporting processes to ensure that the data is an accurate reflection of distance education at their institutions. This data continues to inform the industry and the students it serves.

In 2014, Phil Hill and Russ Poulin wrote an opinion piece stating that, with these uncertainties, the 2012 data served as a shaky baseline. We stand by that statement, but are encouraged by the progress in improved reporting.

This also means that some of the increases for distance education enrollments that we reported earlier this year may be due to addressing the procedural undercounts and not due to additional enrollments. Without having numbers for the undercounts, it is impossible to gauge the exact impact of students going unreported.

Keep the IPEDS Distance Education Questions in the Surveys

The U.S. Department of Education is considering massive cuts to its IPEDS reporting requirements. We can understand the interest in stopping the collection of data that is not used. We encourage the Department to keep the distance education questions in future versions of the IPEDS Fall Enrollment reports.

Even with the problems cited, this is still the best data available.

Terri Taylor Straut

Ascension Consulting

With help from….

Russ Poulin

Director, Policy and Analysis

WCET

Photo credit: “Confused” key from Morgue File.
